The awaited 42 CFR Part 2 update NPRM has been posted in preview form in the Federal Register, and is expected to be officially published later this week. I finished my tweet-through last night, and you can find all the text below.

A thread on the NPRM revising rules on Confidentiality of Substance Use Disorder (SUD) Patient Records #42cfr2_nprm

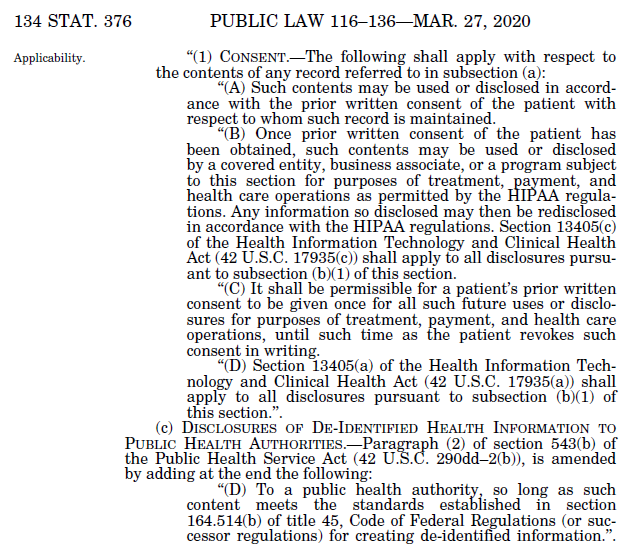

#42cfr2_nprm This rule is required to implement requirements in section 3221 of the CARES Act (see https://congress.gov/116/plaws/publ136/PLAW-116publ136.pdf#page=95).

That law changed the consent requirements, simplifying them, but rule changes were necessary to conform. Thus we now have the long-awaited #42cfr2_nprm

There's a 60-day comment period starting soon (at official publication in the FR), then a final rule, effective 60 days after its publication, with compliance required 22 months after that. So, all of this would be REQUIRED of entities within about 2.5 years. #42cfr2_nprm
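For anyone who wants to check the "about 2.5 years" arithmetic, here is a rough sketch. The 60-day comment period, the 60-day effective date, and the 22-month compliance window come from the NPRM as described above. The gap between the close of comments and the final rule is NOT stated anywhere; `months_to_final_rule` is purely my assumption, and the year in the example date is also assumed (only 12/2 is known). The function names are hypothetical.

```python
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the target month's length."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    # First day of the following month, minus one day, gives the last day of the target month.
    if month == 12:
        next_first = date(year + 1, 1, 1)
    else:
        next_first = date(year, month + 1, 1)
    last_day = (next_first - timedelta(days=1)).day
    return date(year, month, min(d.day, last_day))


def compliance_timeline(nprm_published: date, months_to_final_rule: int) -> dict:
    """Estimate milestone dates; months_to_final_rule is an assumption, not from the NPRM."""
    comment_close = nprm_published + timedelta(days=60)            # 60-day comment period
    final_rule = add_months(comment_close, months_to_final_rule)   # ASSUMED gap
    effective = final_rule + timedelta(days=60)                    # effective 60 days after publication
    compliance = add_months(effective, 22)                         # compliance 22 months later
    return {
        "comment_close": comment_close,
        "final_rule": final_rule,
        "effective": effective,
        "compliance": compliance,
        "years_total": (compliance - nprm_published).days / 365.25,
    }


# Example input: the year and the 4-month gap are assumptions for illustration only.
example = compliance_timeline(date(2022, 12, 2), months_to_final_rule=4)
```

With a four-month gap the total lands at roughly two and a half years; a slower final rule pushes everything out by the same amount.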

#42cfr2_nprm Is two years enough for compliance (plus the ~ 6 months additional warning this NPRM gives you)? @HHSGov specifically asks this question on page 8.

#42cfr2_nprm Major proposals include: aligning more with HIPAA in various places, including noting that this rule does not prevent anything required by HIPAA, referencing HIPAA content, or revising existing HIPAA content for use in this rule.

#42cfr2_nprm Major proposals (cont.): Apply breach notification requirements for records covered by Part 2, align w/ HIPAA accounting of disclosures, patient right to request restrictions, HIPAA authorizations with 42CFR2 Consents, ...

#42cfr2_nprm next one is huge: replace ... requiring consent for uses & disclosures for payment & certain health care operations with permission to use & disclose records for TPO with a single consent ... until ... patient revokes ... in writing

HIPAA NPP and Part 2 SUD Notice to patients are being aligned under #42cfr2_nprm

And NOTE, this rule also mods some regs under HIPAA too in minor ways to attain better uniformity across both.

I'm guessing that post-implementation, this page https://hhs.gov/hipaa/filing-a-complaint/index.html will undergo revisions, so that there's one place to complain to the Secretary regardless of whether it's a HIPAA or Part 2 violation.

45 CFR 164.520 (HIPAA Privacy stuff) will undergo some changes to enable alignment with #42cfr2_nprm for NPP and Accounting of Disclosures, but HHS will wait on some HITECH stuff to complete before finalizing the Privacy Rule provisions, to make implementation easier on affected entities.

"The existing Part 2 regulations do not permit the disclosure of Part 2 records for public health purposes", but #42cfr2_nprm would "permit Part 2 programs to disclose de-identified health information to public health authorities." NOTE: De-identified = HIPAA 45 CFR 164.514(b)

There's a big chunk of stuff in the #42cfr2_nprm about court orders for disclosure for various purposes. This is not my bailiwick, both literally and figuratively.

And now we have completed the bulk of our reading, and get to @HHSGov's request for specific feedback at page 115 for the #42cfr2_nprm

This is followed at page 126 by a summary of what I just summarized (mine is shorter).

I'm going to skip the cost-benefit analysis in #42cfr2_nprm, b/c that takes a while for me to process. It isn't just reading and gut feel and an understanding of history. I make models when these are important to me, and this one is.

But to provide a short summary of the #42cfr2_nprm analysis: The ROI is about 6 years.

The proposed regulatory text for #42cfr2_nprm appears starting at page 202. We aren't going over that today (although I may later).

This is expected to be published in the FR on 12/2.

I like #42cfr2_nprm. It will probably get some minor revisions, and possibly some delayed activation dates, but frankly, I think many providers and much of the #HealthIT industry would support its content. From a patient perspective, I find it good, but requiring deeper reads.

And yes, deeper reads is plural. I read a rule three times. This was just my first cursory read-through/tweet through.