I spent the day at the Consumer Health IT Summit kicking off Health IT Week in DC. The day was packed with activities scheduled down to 5-, 10- and 15-minute blocks of time. Even with an unscheduled break thrown in, they still managed to end the day on time.

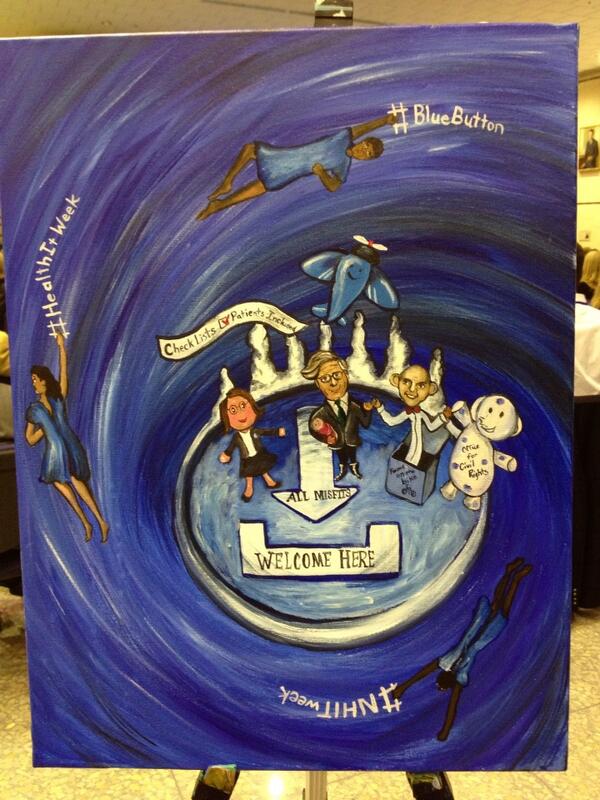

Farzad was our host du jour. He briefly talked about how many of us in the room were misfits, a quote that Regina Holliday immediately riffed on in her art (see the last image).

ePatient Dave gave the keynote, "How Far We've Come: A Patient Perspective". He ran through 40 slides in 15 minutes, an amazing pace. The deck was remarkable for how much of the ground we've covered over the past few years it touched on, and I even got a starring role in a few slides. A lot of what we heard today I've heard before in other settings, but many of the people in the room were hearing it for the first time.

OCR updated their memo on patients' right to access their data just in time for new rules to go into effect Monday of next week (if you need to find it again quickly, it's at http://tinyurl.com/OCRmemo). They also released new model notices of privacy practices, and added a video.

The folks over at OCR have been pretty busy, I'd say.

There's some new work "Under Construction" at ONC around Blue Button: a nationwide gateway into the Blue Button ecosystem. Patients will be able to find out from that site how to access their data from providers and payers using Blue Button. Want to be sure your patients can connect to it? Talk to @Lygeia. We previewed a great video that I would love to be able to use to sign up data holders. It's a shame that at a meetup of several well-connected social media folk there isn't a list of URLs to redistribute, but then, as Farzad said a few months back, marketing really isn't the government's forte.

We heard from a number of folks at ONC, CMS, OCR and elsewhere in HHS in the first half of the morning. A couple of key take-aways for me: In recent regulation, CMS proposed paying for coordination of care, a la using meaningful use capabilities like Blue Button. Director of eHealth Standards at CMS Robert Tagalicod was also heard to say that "We are looking at incenting the use of Blue Button for Meaningful Use Stage 3." No surprise to me, but confirmation is always good.

Todd Park (US CTO) popped in for a short cheerleading session. If anyone can talk faster about Blue Button than Farzad, it's Todd (I feel comfortable calling him Todd because that's what my daughter calls him).

We had a short unscheduled break because the ONC folk finally realized that four hours in our seats was NOT going to cut it. Someone needs to teach these folks how to run a conference someday. It would also be nice if we didn't have to go through security just to get a cup of coffee (but after GSA and sequestration, what could we expect?). Even so, after a quick fifteen-minute break we were back in our seats. Lygeia wields a mean hammer.

The Consumer Attitudes and Awareness session was the first time I'd seen ONC start talking about marketing. I'm still a bit disappointed that, so far, ONC is only thinking about targeting people who are already sick in its Blue Button marketing efforts. I really think that we need to change our culture, and that means starting with our youth. I'll keep hounding them and anyone else who will listen until I get my way there. I can teach an eight-year-old to write a HIPAA letter. Why shouldn't we start with eighteen-year-olds?

ONC announced the winner of the Blue Button Co-Design Challenge: GenieMD. The app looks good, and it has the one main thing I want: it uses an API to access my data. I'll have to take a look at it later.

We heard from a panel of eHealth investors. I couldn't help but feel that they are still disconnected from patients. Monetization of eHealth seems to have two places to go: either get more revenue from patients, doctors, or anyone else who has money to spend, or take a cut of the savings. I don't think any one of these investors realizes that the healthcare market is already saturated with places to spend money, and adding one more will only take in a little bit, whereas the opportunities for SAVING money are probably a lot more lucrative and could readily pay for the investment. The more products we build to deliver patients, patient data, or health data to someone else for bucks, the more silos we simply create in our healthcare delivery system. We've got to think about ways to make money by freeing things up and breaking down barriers. As I tweeted during that session:

And that was retweeted a dozen times.

After lunch we met up again to do some work on Outreach and Awareness to Consumers, including getting into some of the marketing details around the new program, and doing some A/B testing with the audience.

Overall, it was a good day. Now I sit in my hotel room in Philly, writing this post and preparing for tomorrow's ePatient Connections conference, where I'm speaking and on a panel. It's not my usual venue, but I have a soft spot for Philly since my family is from here and I grew up outside the city. So I'm taking a personal day for the conference, and then I'm back home for a few days. After that, I'll be at the HL7 Working Group meeting in Cambridge, attending it the worst way possible: driving in every day and home every night. I'll get to see my bed, but probably not any of my family while they are awake.

And to polish it all off, here is Reggie's art: With Lygeia, ePatient Dave, Farzad, and Leon all getting ready to depart the Island of Misfits.

Fitting, I think (or should that be misfitting?). Farzad will be leaving ONC on October 5th, and had this advice to offer his successor (I think she'll do just fine).

-- Keith

P.S. I am very sad that today was so marred by the attack at the Navy Yard, about half a mile from where we were all sitting. The police presence in the city as we headed over to Tortilla Coast was not quite as scary as Boston a week after the bombings, but close enough. I'm not used to walking around a city where automatic rifles are carried at patrol ready and police are standing on every block.